How can we judge health and science information?

As with media literacy, judging health and science information involves everything from the basic aspects of how scientists form and test hypotheses (summarized at the beginning of this section) to the mechanics of how health and science research is funded, reviewed, published, disseminated and covered by journalists. Some educators have defined these skills as “scientific literacy,” but many of them are very similar to those we associate with media and digital literacy. For example, Paul Hurd, who popularized the term scientific literacy, describes a scientifically literate person as someone who, among other skills and habits:

- Distinguishes experts from the uninformed

- Distinguishes theory from dogma, and data from myth and folklore

- Recognizes that almost every facet of one’s life has been influenced in one way or another by science/technology

- Distinguishes science from pseudo-science such as astrology, quackery, the occult and superstition

- Distinguishes evidence from propaganda, fact from fiction, sense from nonsense, and knowledge from opinion

- Knows how to analyze and process information to generate knowledge that extends beyond facts

- Recognizes when one does not have enough data to make a rational decision or form a reliable judgment

- Recognizes that scientific literacy is a process of acquiring, analyzing, synthesizing, coding, evaluating and utilizing achievements in science and technology in human and social contexts

- Recognizes the symbiotic relationships between science and technology and between science, technology and human affairs[1]

This section will describe four key ways of achieving these goals: being an informed reader of science news; identifying scientific consensus on an issue; evaluating the authority of a source (either an organization or an individual) making a claim; and performing specialized searches that only deliver results from authoritative sources.

Being an informed reader

The biggest area of overlap between digital media literacy and scientific literacy is the importance of understanding how scientific work gets communicated and eventually reported as news. The following tips for reading science news draw largely on advice from science writer Emily Willingham:

- Go beyond the headline: Headlines, which are often written by people other than the person who wrote the article, simplify issues to emphasize the most sensational aspects of a story – which may not be the most important – and can be misleading. Read the article to get the whole story.

- Look for the basis of the article: Is it about new research, or connecting existing research to a new story? Read through the story to find out what it’s based on, and watch out for words like “review,” “perspective,” or “commentary” – these usually mean no original research has been done. As well, don’t put too much stock in research announced at conferences, as this hasn’t usually been through the peer-review process.

- Look at what the research is measuring: Is it an “association,” a “risk,” a “link” or a “correlation”? Any of those words suggests that we can’t yet draw firm conclusions, because they only mean there is a relationship between two or more things. Sometimes, of course, a correlation (a relationship between two events) does turn out to reflect causation (where one event causes the other), but the first findings that make the news usually haven’t shown that.

- Look at the original source: Does the story have a link to the original research? If so, follow it so you can find out where it was published. If not, do a search for the title of the paper. If you don’t have that, search for the authors and subject of the research.

- If the research was published in a scientific journal, do a Google search for the journal’s name plus the words “impact factor” to see whether other scientists draw on it. Anything above 1 shows that the journal is part of the scientific community. If the search doesn’t show an impact factor at all, the source usually isn’t considered an academic journal.

- When someone cites information from a database, check the standards for what is included in it. For example, the VAERS database is heavily used to provide examples of supposed negative effects of vaccines. But because anyone can add an entry to the database without providing any evidence, its contents can’t, on their own, show whether a vaccine actually caused any of the reported effects.[2]

- Keep commercial considerations in mind: News outlets want readers; researchers need funding; the institutions that fund them often want publicity. Even when a science story is entirely legitimate, and not someone trying to scare you into buying a book or some nutritional supplements, there are lots of reasons why the importance or implications of new research might be exaggerated by anyone in that chain.[3] Marion Nestle, in her book Unsavory Truth: How Food Companies Skew the Science of What We Eat, suggests that “whenever I see studies claiming benefits from a single food, I want to know three things: whether the results are biologically plausible; whether the study controlled for other dietary, behavioral, or lifestyle factors that could have influenced its result; and who sponsored it.”[4]

Similarly, Joel Best, in his book Damned Lies and Statistics, suggests that “there are three basic questions that deserve to be asked whenever we encounter a new statistic”:[5]

- Who created this statistic? Is it from a disinterested source (for example, Statistics Canada or the Pew Research Center) or from an interest group? If the latter, would they have been more concerned with avoiding false positives (which might make an issue or phenomenon seem bigger than it is) or false negatives (which make it seem smaller)?

- Why was this statistic created? Was it part of a recurring survey, a study done out of scientific interest, or an effort to draw attention to an issue?

- How was this statistic created? If a statistic seems particularly shocking, it may be worth looking at the original study or survey to see whether it’s being reported accurately. For instance, a 2022 study about Ontario students’ knowledge about antisemitism was widely reported as showing that a third believed the Holocaust didn’t happen or had been exaggerated. In fact, only ten percent said this (though that is obviously still far too many), while 22 percent said they were “not sure what to answer.”[6] Only by combining those two groups (10 + 22 = 32 percent) does the figure approach a third.

Best points out, though, that “we should not discount a statistic simply because its creators have a point of view, because they view a social problem as more or less serious. Rather, we need to ask how they arrived at the statistic. … Once we understand that all social statistics are created by someone, and that everyone who creates social statistics wants to prove something (even if that is only that they are careful, reliable and unbiased), it becomes clear that the methods of creating statistics are key.”[7] As with media in general, then, it is the constructed nature of statistics that we need to understand.

Identifying consensus

“In an active scientific debate, there can be many sides. But once a scientific issue is closed, there’s only one ‘side.’”[8]

To put any claim in context, we need to know whether there’s a consensus in that field and, if there is, how strongly it’s held. Besides helping us judge new claims and findings, knowing the consensus helps us tell the difference between contentious or novel theories and fringe ideas like “race science,” “vaccine shedding” and flat-Earthism: “Fringe ideas are not simply ideas with a smaller than usual following. They are ideas that are not in dialogue with the profession at all.”[9]

As noted above, one of the challenges of understanding health and science topics is that both news and social networks tend to be biased towards what’s new, such as recent findings or discoveries. This can make it harder to gauge the reliability of health and science information because these new findings, sometimes called “science-in-the-making,” don’t necessarily reflect the consensus view of the scientists in the field.[10] Similarly, there tends to be more news coverage of fields where the consensus is low, such as nutrition,[11] and articles about health and science topics often misrepresent the consensus, continuing to present topics such as human-caused climate change or the health effects of tobacco as under debate long after scientists in the field have reached a consensus.[12]

This bias towards new findings can contribute to the misunderstanding of science as an individual rather than a collective process and can exaggerate the extent to which scientists disagree on a topic.[13] Understanding consensus also means understanding the importance of uncertainty – that “when a new study is published, its results are not taken to be the definitive answer but rather a pebble on the scale favoring one of several hypotheses” – and the role of peer review in testing findings.[14]

Groups or individuals may take advantage of this to make it seem as though there isn’t a consensus in a field where one has been reached, or that a consensus has been reached where one hasn't been: “tentativeness is part of the self-correcting aspect of science, but one that those who fault science frequently ignore.”[15] But “most science teachers and science educators are rarely convinced at first glance when new results are emanating from the frontier of science. We gladly read about new and exciting findings, but keep all possibilities open – for the time being. The reason is that we first want to know if there is some sort of consensus among the relevant experts concerning these new scientific knowledge claims.”[16]

New findings can be misleading because consensus is built on replication: “people test hypotheses and publish their findings, and other scientists try to conduct additional experiments to show the same thing… Facts can change, and dogmas can be challenged — but one paper alone cannot do that.”[17]

In any field, consensus has three parts:

- Consilience of evidence, meaning that evidence from different sources is in overall agreement;

- Social calibration, meaning that everyone working in the field has the same standards of investigation and evidence, through mechanisms like peer review and replication;

- And social diversity, meaning that many different people and groups work in the field, representing a range of cultures and backgrounds.[18]

When we apply this to the question of whether human activity is contributing to climate change, for instance, we see that while small details might differ there is an overwhelming consilience of the evidence; that there’s overall agreement on how it happens and the work showing that has been repeatedly peer-reviewed and replicated; and that the same results have been found by scientists all around the world, with dozens of scientific societies, science academies and international bodies having published consensus statements.[19]

Some spreaders of disinformation and pseudoscience attack the idea of consensus itself, claiming that theories such as geocentrism or eugenics were once the scientific consensus, or pointing to Galileo or Alfred Wegener (the originator of the theory of continental drift) as proof that consensus suppresses the truth. (This argument is common enough that it has its own name: the “Galileo gambit.”) In fact, opposition to Galileo’s heliocentrism was mostly on religious grounds, and Galileo’s scientific peers accepted his findings almost immediately;[20] while many prominent people in the early 20th century held eugenicist beliefs, eugenics never came close to being the scientific consensus; and Wegener’s work, far from being suppressed, was published in peer-reviewed journals.[21]

For this reason, it’s important to understand not just that the scientific consensus can change, but how it happens, to avoid the misconception that everything scientists believe now will eventually be overturned. Instead, as digital media literacy expert Mike Caulfield puts it, “as a fact-checker, your job is not to resolve debates based on new evidence, but to accurately summarize the state of research and the consensus of experts in a given area, taking into account majority and significant minority views.”[22]

How do we find consensus? Search engines like Google and DuckDuckGo are generally not the best place to look for consensus. Because they search the whole internet, it can be hard to tell if the results you see come from an expert or authority on the topic. If you do use them, use the broadest possible search terms and add the word “consensus”: for instance, use “pyramids” “built” “consensus” instead of “who built the pyramids.” Remember, you’ll need to check the reliability of any search results you don’t recognize.

In general, though, an encyclopedia is a better place to start, because encyclopedia entries summarize the consensus on a topic. In the case of Wikipedia, the most widely used online encyclopedia, you can actually see how that consensus was reached by looking at an article’s Talk and View History pages. (Although any user can edit Wikipedia articles, automated tools now catch and quickly correct most cases of vandalism or ideologically motivated edits. As a result, the more controversial articles are actually more likely to be accurate.[23] See our handout Wikipedia 101 for more details on how to tell whether a specific Wikipedia article is reliable.)

Another option is to look for position statements from authoritative sources like the National Academies of Sciences, Engineering and Medicine, or professional organizations like the Canadian Paediatric Society.

Here are some lists of authoritative sources on different topics. You can use these lists to find sources which you can consult or search to find the consensus on different topics:

- American scientific societies: nasa.gov/climate-change/scientific-consensus/

- American medical organizations and agencies: gov/organizations/all_organizations.html

- Canadian healthcare associations and societies: com/healthcare-societies-assoc/

- Canadian health organizations: csme.org/page/HealthOrganizations

- International healthcare associations: ipac-canada.org/associations-organizations

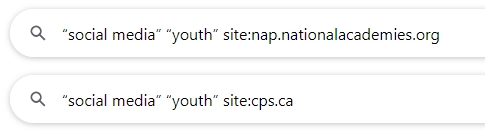

Frequently, the easiest way to search an organization’s website is to do a search on the topic and add the site: operator to limit the results to that website. For instance, to find out whether the National Academies and the Canadian Paediatric Society have released position statements on the possible effects of social media on youth, we could do the following searches:

Both searches lead to position statements that provide an overview of current research on the topic, including to what extent there is a consensus.

It’s essential to remember, though, that any organization can claim to be authoritative or to represent professionals in a field. At first glance, a group such as the Association of American Physicians and Surgeons might seem authoritative; only by searching for it, or consulting Wikipedia, will we see that it’s “a politically conservative non-profit association that promotes conspiracy theories and medical misinformation, such as HIV/AIDS denialism, the abortion–breast cancer hypothesis, and vaccine and autism connections.” (It also doesn’t appear in the list of American medical organizations and agencies above.) As with any source, make sure that an organization or institution is reliable and authoritative before consulting it.

Specialized sources

Another strategy is to take the opposite approach and confine your search to sources that you already know are reliable and reflect the scientific consensus. This requires a bit more expert knowledge but can save you a lot of time and can prevent you from (consciously or unconsciously) seeking out information that you want to be true or that supports what you already believe.

Evaluating authority

While it’s not always possible to judge the reliability of a specific scientific or medical claim, it is usually possible to judge the authority of the person making it: “Students should first look for evidence about the trustworthiness of the source, not the claim.”[24] As with our judgments of claims, though, our judgments of authority are often influenced by whether or not we agree with the supposed expert. One study found that people rated the authority of experts with the same credentials differently based on whether or not the expert’s opinion matched their own.[25] At the same time, promoters of misinformation rely on supposed experts to bolster the credibility of their claims.[26] So how do we determine if someone really is an authority? How can we tell the difference between someone who may have genuine data challenging the scientific consensus and a crank with no standing in the scientific community?

It’s important to understand that being an “expert” may be limited to having expertise on a very specific subject. The popular distinction between scientists and non-scientists can obscure the fact that most scientists have little specialized knowledge outside of their area: while physicists may know more about biology than someone without any scientific education, for example, they are unlikely to know enough to make or judge claims in that field.

The first step, then, is to make sure that an expert’s credentials are actually in the relevant area. This may require a bit of additional research. For example, Dr. Joseph Mercola, whose website promotes (and sells) supplements both for nutrition and as an alternative to vaccines, has a doctorate in osteopathic medicine, a medical degree with a particular emphasis on the muscles and skeleton rather than on nutrition or the immune system.

If the person claiming authority is a scientist, you can also find out whether they have a publication history in the field by searching for their name on Google Scholar. For instance, the global warming denialist blog Principia Scientific ran a story about a paper by Christopher Booker claiming that the scientific consensus was the result not of evidence but of “groupthink”;[27] Google Scholar shows that Booker has published works promoting conspiracy theories about climate change, wind power and the European Union, but no scientific work on these or any other topics.

As well, an expert’s authority can be compromised if there’s reason to think their judgment isn’t objective, whether for ideological or commercial reasons, so find out who is paying for their platform. If the funder has political or financial reasons for wanting you to believe the expert, or if it isn’t clear who the funder is, you should treat the expert’s claims with additional skepticism.[28]

You can use similar methods to determine if an organization or publication is an authority. As with other types of information, you first need to “go upstream” to determine where a claim was first published. Once you’ve determined its origin, you can do a search with the methods listed above and in other sections to see if the source is well-regarded and if there is any reason to consider it biased. For example, the American Academy of Pediatrics and the American College of Pediatricians both represent themselves as authoritative bodies on pediatrics, but a few moments’ research shows that the AAP has 67,000 members while the ACP has only 700.

For scientific articles, you can also find out the journal’s impact factor (see “Being an informed reader” above) to see whether other scientists draw on it, and search for the title of the paper with the words “replicated” or “retracted” to see what other scientists have found about it. For example, a search for “Wakefield autism retracted” will show that The Lancet, the journal that originally published the article linking the MMR vaccine with autism, eventually retracted it, and that an investigation later published in the British journal BMJ described the study as an “elaborate fraud.”[29]

[1] Hurd, P. D. (1998). Scientific literacy: New minds for a changing world. Science Education, 82(3), 407-416. doi:10.1002/(sici)1098-237x(199806)82:33.3.co;2-q

[2] Meyerson, L., & McAweeney, E. (2021). How Debunked Science Spreads. Virality Project.

[3] Willingham, E. (2012). Science, health, medical news freaking you out? Do the Double X Double-Take first. Double X Science.

[4] Nestle, M. (2018). Unsavory truth: How food companies skew the science of what we eat. Basic Books.

[5] Best, J. (2012). Damned lies and statistics: Untangling numbers from the media, politicians, and activists. University of California Press.

[6] Lerner, A. (2022). 2021 Survey of North American Teens on the Holocaust and Antisemitism. Liberation75.

[7] Best, J. (2012). Damned lies and statistics: Untangling numbers from the media, politicians, and activists. University of California Press.

[8] Oreskes, N., & Conway, E. M. (2010). Defeating the merchants of doubt. Nature, 465(7299),

[9] Caulfield, M., & Wineburg, S. (2023). Verified: How to think straight, get duped less, and make better decisions about what to believe online. University of Chicago Press.

[10] Kolstø, S. D. (2001). Scientific literacy for citizenship: Tools for dealing with the science dimension of controversial socioscientific issues. Science Education, 85(3), 291-310. doi:10.1002/sce.1011

[11] Nestle, M. (2018). Unsavory truth: How food companies skew the science of what we eat. Basic Books.

[12] Oreskes, N., & Conway, E. M. (2011). Merchants of doubt: How a handful of scientists obscured the truth on issues from tobacco smoke to global warming. Bloomsbury Publishing USA.

[13] Caulfield, M. (2017). "How to Think About Research" in Web Literacy for Student Fact-Checkers. https://webliteracy.pressbooks.com/

[14] Bergstrom, C. (2022). To Fight Misinformation, We Need to Teach That Science Is Dynamic. Scientific American.

[15] McComas, W. F. (1998). The principal elements of the nature of science: Dispelling the myths. In The nature of science in science education: Rationales and strategies (pp. 53-70). Dordrecht: Springer Netherlands.

[16] Kolstø, S. D. (2001). Scientific literacy for citizenship: Tools for dealing with the science dimension of controversial socioscientific issues. Science Education, 85(3), 291-310. doi:10.1002/sce.1011

[17] Shihipar, A. (2024). My Fellow Scientists Present Research Wrong. Bloomberg.

[18] Cook, J. (n.d.). Explainer: Scientific consensus. Skeptical Science.

[19] National Aeronautics and Space Administration. (n.d.). Scientific Consensus. https://science.nasa.gov/climate-change/scientific-consensus/

[20] Livio, M. (2020). When Galileo Stood Trial for Defending Science. History.com.

[21] Oreskes, N. (2019). Why Trust Science? Princeton University Press.

[22] Caulfield, M. (2017). "How to Think About Research" in Web Literacy for Student Fact-Checkers. https://webliteracy.pressbooks.com/

[23] Mannix, L. (2022). Evidence suggests Wikipedia is accurate and reliable. When are we going to start taking it seriously? The Sydney Morning Herald.

[24] Zucker, A., & McNeill, E. (2023). Learning to find trustworthy scientific information.

[25] Mooney, C. (2011). The Science of Why We Don't Believe Science. Mother Jones.

[26] Harris, M. J., Murtfeldt, R., Wang, S., Mordecai, E. A., & West, J. D. (2024). Perceived experts are prevalent and influential within an antivaccine community on Twitter. PNAS Nexus, 3(2), pgae007.

[27] Leuck, D. (2018). Global Warming: The Evolution of a Hoax. Principia Scientific.

[28] Massicotte, A. (2015). When to trust health information posted on the Internet. Canadian Pharmacists Journal / Revue Des Pharmaciens Du Canada, 148(2), 61-63. doi:10.1177/1715163515569212

[29] No author listed. (2011, January 5). Retracted autism study an ‘elaborate fraud,’ British journal finds. CNN.